In my last post, I talked about how Large Language Models (LLMs) like ChatGPT are going to get better because they will incorporate a sense of reward seeking. Now I want to talk about how they’re going to improve by incorporating better memory for events. This will allow tools like ChatGPT to remember previous conversations.

If you use Alexa or the Google Assistant, you know that these assistants can remember facts about you: your name, your favorite sports team, or your mom’s phone number. They don’t do a very good job of it, though, and LLMs have the potential to be better. I expect this to be a big change that we’ll see in the coming year.

Technical Background

LLMs have a context window (for ChatGPT it’s about 3,000 words), and they have no short-term memory outside of that window. Research efforts like Longformer have tried to increase this context size, but it’s clear that the strategy of indiscriminately dumping more and more content into the prompt has limits.
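To make the window limit concrete, here is a minimal sketch of what a chat application has to do when the conversation grows too long. It counts words to match the rough figure above. Real models count tokens rather than words, and the function name `fit_to_window` and the sample messages are made up for illustration.

```python
def fit_to_window(messages, max_words=3000):
    """Keep the most recent messages whose total word count fits the budget."""
    kept = []
    used = 0
    # Walk backwards so the newest messages are considered first.
    for message in reversed(messages):
        n_words = len(message.split())
        if used + n_words > max_words:
            break  # this message and everything older is dropped
        kept.append(message)
        used += n_words
    kept.reverse()  # restore chronological order
    return kept


# Hypothetical conversation history, oldest message first.
history = [
    "I am planning a trip to Lisbon in March.",
    "What is the weather like there?",
    "Can you suggest a good restaurant?",
]

# With a tiny budget of 12 words, the oldest message no longer fits.
print(fit_to_window(history, max_words=12))
```

Anything that falls out of the window is simply gone as far as the model is concerned, which is why a separate memory system is attractive.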

LLMs can also remember information that they’ve been trained on, which includes all of Wikipedia, millions of posts from Reddit and other places on the web, books, movie transcripts, news articles, and much more. But this background memory is processed and used differently than the prompt. So we expect there to be room for a memory system like the one that humans use for recent events.

How It Will Be Done

The user could recall memories with a prompt like “Remember what I told you about the trip I’m planning? I want to revise it so that we leave in June instead of March. Can you suggest changes?”

There are two big ways of doing this: storing memories as structured data or as unstructured data.

Memory could be structured data, like the Google Knowledge Graph, which is how the Google Assistant remembers your phone number or movies you like. Structured data is inherently difficult to produce, since facts have to be extracted from free-form text into a fixed schema, but ChatGPT is able to do this.
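As a rough illustration of the structured approach, the sketch below stores facts under a (subject, attribute) key. This is a toy stand-in, not how the Google Knowledge Graph or any assistant actually works. The class name `StructuredMemory`, its methods, and the stored values are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class StructuredMemory:
    """A toy fact store keyed by (subject, attribute)."""

    facts: dict = field(default_factory=dict)

    def remember(self, subject, attribute, value):
        # A newer value overwrites the older one stored under the same key.
        self.facts[(subject, attribute)] = value

    def recall(self, subject, attribute):
        # Returns None when nothing has been stored for this key.
        return self.facts.get((subject, attribute))


# Hypothetical facts that an LLM might have extracted from a conversation.
memory = StructuredMemory()
memory.remember("user", "name", "Sam")
memory.remember("user", "trip_departure_month", "March")
memory.remember("user", "trip_departure_month", "June")  # the revised plan

print(memory.recall("user", "trip_departure_month"))
```

The lookup is exact and cheap, but someone (or some model) has to decide ahead of time which attributes exist, which is the hard part the paragraph above refers to.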

Memory could also be an unstructured collection of ideas, maybe stored as text or numerically as vectors. This is more like how Google search treats webpages, as unconstrained objects that could contain anything. Recently we’ve seen projects like BabyAGI or AutoGPT, which use tools like Pinecone that can do vector search. I expect that this approach is going to be more widely used to augment LLMs.

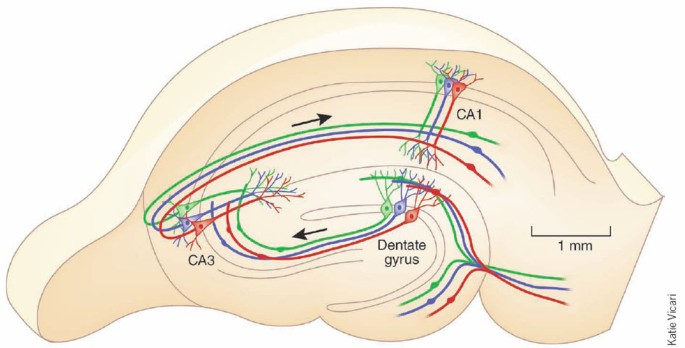

In the human brain, the hippocampus assists in recall for recent memories. The neural details are complicated, but one way of thinking about it is that it searches for stored memory vectors that are similar to your current situation.
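The idea of searching for stored vectors that resemble the current situation can be sketched in a few lines. This is a toy version under loud assumptions: the `embed` function here is just a bag-of-words count, standing in for a learned embedding model, and the sorted list stands in for a vector search service such as Pinecone. The function names and the sample memories are invented for illustration.

```python
import math
from collections import Counter


def embed(text):
    """Toy embedding: bag-of-words counts (a stand-in for a real model)."""
    words = [w.strip(".,!?").lower() for w in text.split()]
    return Counter(w for w in words if w)


def cosine_similarity(a, b):
    """Cosine of the angle between two sparse count vectors."""
    dot = sum(a[word] * b[word] for word in a if word in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def recall(query, memories, top_k=1):
    """Return the top_k stored memories most similar to the query."""
    query_vec = embed(query)
    ranked = sorted(
        memories,
        key=lambda m: cosine_similarity(query_vec, embed(m)),
        reverse=True,
    )
    return ranked[:top_k]


# Hypothetical memories saved from earlier conversations.
memories = [
    "We are planning a trip to Portugal and leave in March.",
    "My favorite sports team won last night.",
    "My mom has a new phone number.",
]
query = "Remember the trip I am planning? We leave in June instead."
print(recall(query, memories))
```

The retrieved memory would then be pasted into the prompt alongside the user's new message, so the model only sees the few past items that are relevant instead of the entire history.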

When

AI companies are already working on this. I think this sort of integration is perceived as somewhat obvious, and indeed we already see small projects that are doing it.

I predict that by the beginning of 2024, there will be widely used consumer AIs that incorporate a memory like this, and you’ll be able to reference previous interactions when you talk to them.